5.2 Simple Linear Regression

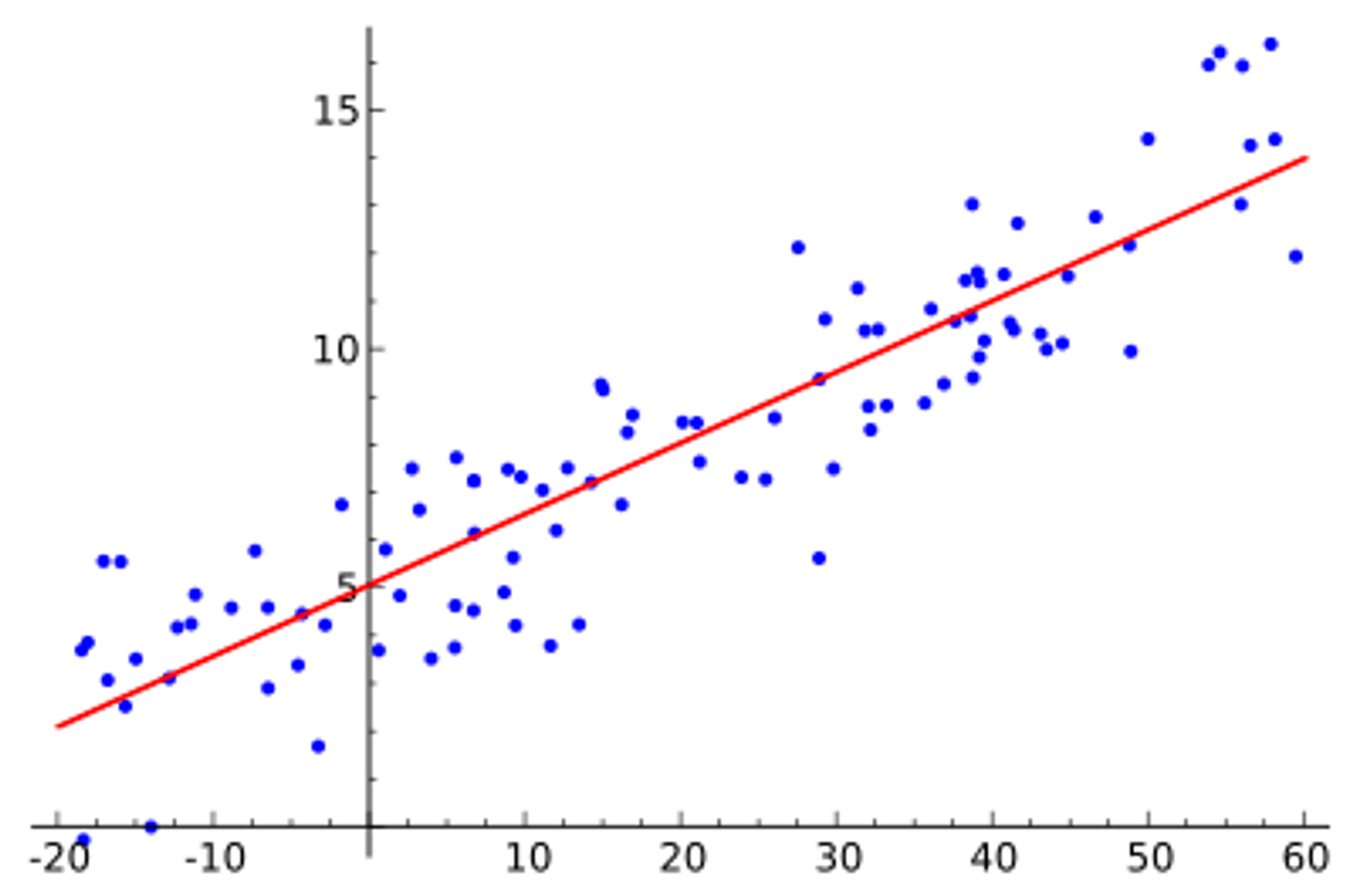

Simple Linear Regression fits a line to the data. The reason it is "simple" is that it only works for two-dimensional data. This means that the line fitted to the data has only one feature. Here's what it could look like:

In this case, the x-axis could represent the input to the machine learning model and the y-axis could represent the output. By fitting a line through the data, we can make predictions for inputs we've never seen before by finding the point on the line that corresponds to those inputs. For instance, if our input were 35, our model would output approximately 10.

Now let's build a better intuition for how this model works. First, let's remind ourselves of the equation for a line:

$$y = mx + b$$

$x$ and $y$ are the two variables that change: $x$ is independent and $y$ is dependent. However, their values do not determine the shape of the model. Instead, the model maps values of $x$ to values of $y$. The two numbers that do determine the shape of the model are $m$ and $b$. As a reminder, $m$ is the slope of the line, and $b$ is the y-intercept.
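To make these roles concrete, here is a minimal Python sketch. The function name `line` and the values chosen for the slope and y-intercept are illustrative assumptions, not values read from the figure; they are picked so that an input of 35 gives an output of 10.

```python
def line(x, m, b):
    """Return y for input x on the line with slope m and y-intercept b."""
    return m * x + b


# Hypothetical parameters, chosen only for illustration.
m, b = 0.25, 1.25

# x and y change from call to call, but the line itself stays the same.
for x in [0, 20, 35]:
    print(x, line(x, m, b))

# Changing the slope or the y-intercept gives a different line,
# so the same input now maps to a different output.
steeper = line(35, m=0.5, b=1.25)
shifted = line(35, m=0.25, b=5.0)
print(steeper, shifted)
```

The inputs passed to `line` never alter the line; only the slope and y-intercept do, which is why those two numbers are the ones that describe the model.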

You may remember that parameters also determine the shape of the model, so in this particular type of model, $m$ and $b$ are the parameters. To standardize our parameter names, we'll rename $m$ to $\theta_1$ and $b$ to $\theta_0$, so that the equation is:

$$y = \theta_1 x + \theta_0$$

Now, for any set of two-dimensional data, our goal is to find optimal values for $\theta_0$ and $\theta_1$ such that they produce a line that fits the data well. We'll cover that in the next section.
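As a rough preview of what finding values that "fit the data well" can look like, here is a small Python sketch. It writes the y-intercept as `theta_0` and the slope as `theta_1` (names assumed here), and it uses the standard least-squares formulas for a line. That choice is our assumption for illustration and may not be the method the next section uses. The data is made up and lies exactly on a line.

```python
def predict(x, theta_0, theta_1):
    """Line with parameters: theta_0 is the y-intercept, theta_1 is the slope."""
    return theta_0 + theta_1 * x


def fit_line(xs, ys):
    """Least-squares estimates of (theta_0, theta_1) for paired data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how x and y vary together, divided by how much x varies.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    theta_1 = sxy / sxx
    # Intercept: makes the line pass through the point (mean_x, mean_y).
    theta_0 = mean_y - theta_1 * mean_x
    return theta_0, theta_1


# Made-up data that lies exactly on y = 1 + 2x.
xs = [0, 1, 2, 3]
ys = [1, 3, 5, 7]

theta_0, theta_1 = fit_line(xs, ys)
print(theta_0, theta_1)

# Use the fitted line to predict an input that was not in the data.
new_prediction = predict(4, theta_0, theta_1)
print(new_prediction)
```

Real data rarely falls exactly on a line, so in practice the fitted parameters describe the line that comes closest to the points rather than one that passes through all of them.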

Copyright © 2021 Code 4 Tomorrow. All rights reserved.

The code in this course is licensed under the MIT License.

If you would like to use content from any of our courses, you must obtain our explicit written permission and provide credit. Please contact classes@code4tomorrow.org for inquiries.